VMware Fault Tolerance: What it is and how it works


I. And VMware said, ‘Let there be Fault Tolerance’

Fault Tolerance (FT) was introduced as a new feature in vSphere to provide something that was missing in VMware Infrastructure 3 (VI3): continuous availability for a virtual machine in case of a host failure. High Availability (HA) was a feature introduced in VI3 to protect against host failures, but it caused the VM to go down for a short period of time while it was restarted on another host. FT takes that to the next level and keeps the VM operational during a host failure by running a secondary copy of it on another host server. If a host fails, the secondary VM becomes the primary VM and a new secondary is created on another functional host.

The primary VM and secondary VM stay in sync with each other by using a technology called Record/Replay that was first introduced with VMware Workstation. Record/Replay works by recording a VM's execution and saving it to a log file. That recorded information can then be replayed on another VM to produce a replica that is an exact duplicate of the original VM.

II. Power to the processors


The Record/Replay functionality relies on technology built into certain models of Intel and AMD processors; VMware calls the result vLockstep. This required Intel and AMD to make changes to both the performance counter architecture and the virtualization hardware assists (Intel VT and AMD-V) inside the physical processors. Because of this, only newer processors support the FT feature. These include third-generation AMD Opteron processors from the Barcelona, Budapest and Shanghai families, and Intel Xeon processors based on the Penryn and Nehalem microarchitectures and their successors. VMware has published a knowledgebase article that provides more details on this.

III. But how does it do that?


FT works by creating a secondary VM on another ESX host that shares the same virtual disk file as the primary VM, and then transferring the CPU and virtual device inputs from the primary VM (record) to the secondary VM (replay) over an FT logging network interface card (NIC). This keeps the secondary in sync with the primary and ready to take over in case of a failure. While both the primary and secondary VMs receive the same inputs, only the primary VM produces output such as disk writes and network transmits. The secondary VM’s output is suppressed by the hypervisor, and the secondary does not appear on the network until it becomes a primary VM, so essentially both VMs function as a single VM.

It’s important to note that not everything that happens on the primary VM is copied to the secondary VM. Many actions and instructions are deterministic, so the secondary produces the same result simply by executing them itself, and recording everything would take up a huge amount of disk space and processing power. Instead, only non-deterministic events are recorded, which include inputs to the VM (disk reads, received network traffic, keystrokes, mouse clicks, etc.) and certain CPU events (RDTSC, interrupts, etc.). These inputs are then fed to the secondary VM at the same execution point, so it stays in exactly the same state as the primary VM.
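To make the record/replay idea concrete, here is a toy Python sketch. It is not how vLockstep is implemented: the `ToyVM` class, its tiny "instruction set" and the log format are hypothetical. It only illustrates the property described above: deterministic operations are simply re-executed on the secondary, while non-deterministic inputs are logged together with the execution point at which they occurred and fed back at that same point.

```python
import random
import time


class ToyVM:
    """Hypothetical toy VM whose entire state is one integer accumulator."""

    def __init__(self):
        self.acc = 0
        self.step = 0  # execution point (instruction counter)

    def run(self, program, source):
        for op in program:
            if op == "inc":          # deterministic: same result on any host
                self.acc += 1
            elif op == "double":     # deterministic
                self.acc *= 2
            else:                    # "read_input" / "read_clock": non-deterministic
                self.acc += source(self.step, op)
            self.step += 1
        return self.acc


def make_recorder(log):
    """Primary side: produce real non-deterministic values and record them."""
    def source(step, op):
        if op == "read_input":
            value = random.randint(0, 255)          # stands in for a packet or keystroke
        else:
            value = time.perf_counter_ns() % 1000   # stands in for an RDTSC read
        log.append((step, op, value))               # only these events are logged
        return value
    return source


def make_replayer(log):
    """Secondary side: feed logged values back at the same execution point."""
    entries = iter(log)

    def source(step, op):
        logged_step, logged_op, value = next(entries)
        if (logged_step, logged_op) != (step, op):
            raise RuntimeError("replay diverged from the primary")
        return value
    return source


program = ["inc", "read_input", "double", "read_clock", "inc"] * 3
log = []
primary, secondary = ToyVM(), ToyVM()
primary.run(program, make_recorder(log))
secondary.run(program, make_replayer(log))
print(primary.acc == secondary.acc, len(log), len(program))  # prints: True 6 15
```

Only 6 of the 15 "instructions" produce log entries, which is the same reason FT records inputs and certain CPU events rather than every instruction.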

The information from the primary VM is copied to the secondary VM over a special logging network that is configured on each host server. A dedicated gigabit NIC for FT Logging traffic is highly recommended, although it is not a hard requirement; a shared NIC can be used in small or test/dev environments and for trying out the feature. The traffic sent over the FT Logging network between the hosts can be very heavy, depending on the VM’s workload.

VMware provides a formula for estimating the required FT logging bandwidth in Mbps (disk reads are multiplied by 8 to convert MB/s to Mbps):

VMware FT logging bandwidth ~= (Avg disk reads (MB/s) x 8 + Avg network input (Mbps)) x 1.2 [20% headroom]

To get the VM statistics needed for this formula, use the performance metrics supplied in the vSphere Client. The 20% headroom allows for CPU events that also need to be transmitted and are not included in the formula. Note that disk writes and network transmits are left out because they are outputs: they do not factor into the state of the virtual machine, so FT does not send them to the secondary.
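The formula is easy to script when sizing several VMs. A minimal Python sketch; the function name and the example figures are hypothetical, and the inputs are assumed to be the averages read from the vSphere Client performance charts.

```python
def ft_logging_bandwidth_mbps(avg_disk_reads_mb_per_s, avg_network_input_mbps, headroom=0.20):
    """Estimate FT logging bandwidth in Mbps using VMware's rule of thumb.

    Disk reads are given in MB/s and multiplied by 8 to convert to Mbps;
    network input is already in Mbps. The headroom (20% by default) covers
    CPU events that the formula does not count explicitly.
    """
    base_mbps = avg_disk_reads_mb_per_s * 8 + avg_network_input_mbps
    return base_mbps * (1 + headroom)


# Hypothetical VM: 10 MB/s of disk reads and 20 Mbps of inbound network traffic
estimate = ft_logging_bandwidth_mbps(10, 20)
print(f"{estimate:.0f} Mbps")  # prints "120 Mbps"
```

The result can be compared against the capacity of the FT logging NIC (roughly 1,000 Mbps for Gigabit Ethernet), keeping in mind that several FT-enabled VMs on the same host share that link.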

As you can see, disk reads will typically take up the most bandwidth. If you have a VM that does a lot of disk reading, you can reduce the amount of disk read traffic across the FT Logging network by using a special VM parameter. Adding replay.logReadData = checksum to the VM’s VMX file causes the secondary VM to read data directly from the shared disk instead of having it transmitted over the FT logging network. For more information, see this knowledgebase article.
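If you manage many VMX files, the change can be scripted. A minimal Python sketch, assuming the VM is powered off while its VMX file is edited and that the value is written in the usual quoted `key = "value"` VMX form (the article shows it unquoted, so check the knowledgebase article for the exact syntax); `set_vmx_option` is a hypothetical helper, not a VMware tool.

```python
import re


def set_vmx_option(vmx_text, key, value):
    """Return VMX text with `key = "value"` set, replacing an existing entry.

    Assumes the usual one-option-per-line VMX layout.
    """
    new_line = f'{key} = "{value}"'
    pattern = re.compile(rf"^[ \t]*{re.escape(key)}[ \t]*=.*$", re.MULTILINE)
    if pattern.search(vmx_text):
        # A function replacement avoids backslash interpretation in new_line.
        return pattern.sub(lambda _match: new_line, vmx_text, count=1)
    if vmx_text and not vmx_text.endswith("\n"):
        vmx_text += "\n"
    return vmx_text + new_line + "\n"


# Hypothetical VMX excerpt
sample = 'displayName = "sql01"\nnumvcpus = "1"\n'
updated = set_vmx_option(sample, "replay.logReadData", "checksum")
print(updated)
```

Replacing an existing entry rather than appending a second one keeps the file unambiguous if the script is run more than once.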

IV. Every rose has its thorn

While Fault Tolerance is a useful technology, it does have many requirements and limitations that you should be aware of. Perhaps the biggest is that it currently supports only single-vCPU VMs, which is unfortunate, as many big enterprise applications that would benefit from FT usually need multiple vCPUs (vSMP). Don’t let this discourage you from running FT, however, as you may find that some applications run just fine with one vCPU on some of the newer, faster processors that are available, as detailed here.

Also, VMware has said that support for vSMP will come in a future release. Keeping even a single vCPU in lockstep between hosts is no easy task, and VMware’s developers need more time to develop methods for keeping multiple vCPUs in lockstep between hosts. Additional requirements for VMs and hosts are as follows:

Host requirements:

- CPUs: Only recent HV-compatible processors (AMD Barcelona+, Intel Harpertown+); processors must be in the same family

- All hosts must be running the same build of VMware ESX

- Storage: shared storage (FC, iSCSI, or NAS)

- Hosts must be in an HA-enabled cluster

- Network and storage redundancy to improve reliability: NIC teaming, storage multipathing

- Separate VMotion NIC and FT logging NIC, each Gigabit Ethernet (10 GbE recommended). Hence, a minimum of four NICs (VMotion, FT Logging, and two for VM traffic/Service Console)

- CPU clock speeds of the two ESX hosts must be within 400 MHz of each other.
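The host rules above can be expressed as a pairwise check. A minimal Python sketch: the `Host` record, its field names and the example values are hypothetical (in practice the data would come from vCenter or a tool such as SiteSurvey), and the four-NIC figure is treated as a floor.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set

MAX_CPU_MHZ_DELTA = 400  # clock speeds must be within 400 MHz of each other
MIN_PHYSICAL_NICS = 4    # VMotion, FT logging, and two for VM traffic/Service Console


@dataclass
class Host:
    """Hypothetical inventory record for one ESX/ESXi host."""
    name: str
    cpu_family: str             # e.g. "Intel Nehalem"; both hosts must match
    cpu_mhz: int
    esx_build: int              # both hosts must run the same build
    ha_cluster: Optional[str]   # None if the host is not in an HA-enabled cluster
    datastores: Set[str] = field(default_factory=set)
    physical_nics: int = 0


def ft_pair_issues(a: Host, b: Host) -> List[str]:
    """Return the host requirements an FT primary/secondary pair would violate."""
    issues = []
    if a.cpu_family != b.cpu_family:
        issues.append("CPU families differ")
    if abs(a.cpu_mhz - b.cpu_mhz) > MAX_CPU_MHZ_DELTA:
        issues.append("CPU clock speeds differ by more than 400 MHz")
    if a.esx_build != b.esx_build:
        issues.append("ESX builds differ")
    if a.ha_cluster is None or a.ha_cluster != b.ha_cluster:
        issues.append("hosts are not in the same HA-enabled cluster")
    if not (a.datastores & b.datastores):
        issues.append("no shared datastore in common")
    for host in (a, b):
        if host.physical_nics < MIN_PHYSICAL_NICS:
            issues.append(f"{host.name} has fewer than {MIN_PHYSICAL_NICS} NICs")
    return issues


# Hypothetical hosts; the build numbers are placeholders, not real ESX builds.
esx1 = Host("esx1", "Intel Nehalem", 2930, 1001, "prod", {"lun01", "lun02"}, 4)
esx2 = Host("esx2", "Intel Nehalem", 2260, 1001, "prod", {"lun02"}, 4)
print(ft_pair_issues(esx1, esx2))  # ['CPU clock speeds differ by more than 400 MHz']
```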

VM requirements:

- VMs must be single-processor (no vSMP)

- All VM disks must be “thick” (fully allocated) and not thin; if a VM has a thin disk, it will be converted to thick when FT is enabled.

- No non-replayable devices (USB, sound, physical CD-ROM, physical floppy, physical Raw Device Mappings)

- Make sure paravirtualization is not enabled (some guests, such as Ubuntu Linux 7/8 and SUSE Linux 10, enable it by default)

- Most guest operating systems are supported, with the following exceptions that apply only to hosts with third-generation AMD Opteron processors (i.e. Barcelona, Budapest, Shanghai): Windows XP (32-bit), Windows 2000, Solaris 10 (32-bit). See this KB article for more.

In addition to these requirements, your hosts must also be licensed to use the FT feature, which is only included in the Advanced, Enterprise and Enterprise Plus editions of vSphere.

V. How to use Fault Tolerance in your environment

Now that you know what FT does, you’ll need to decide how you will use it in your environment. Because of FT’s high overhead and limitations, you will want to use it sparingly. FT could be used in some cases to replace existing Microsoft Cluster Server (MSCS) implementations, but it’s important to note what FT does not do: it does not protect against application failure on a VM. It only protects against a host failure. If you need protection against application failure, a solution like MSCS would be better for you. FT is only meant to keep a VM running if there is a problem with the underlying host hardware. If you need protection against an operating system failure, then VMware High Availability (HA) is what you want, as it can detect unresponsive VMs and restart them on the same host server.

FT and HA can be used together to provide maximum protection. If both the primary host and the secondary host failed at the same time, HA would restart the VM on another operable host and spawn a new secondary VM.

VI. Important notes

One important thing to note: if you experience an OS failure on the primary VM, such as a Windows Blue Screen Of Death (BSOD), the secondary VM will also experience the failure, as it is an identical copy of the primary. However, the HA virtual machine monitor will detect this, restart the primary VM, and then spawn a new secondary VM.

Another important note: FT does not protect against a storage failure. Since the VMs on both hosts use the same storage and virtual disk file, that storage is a single point of failure. Therefore it’s important to have as much redundancy as possible to prevent this, such as dual storage adapters in your host servers attached to separate switches (known as multipathing). If a path to the SAN fails on one host, FT will detect this and switch over to the secondary VM, but this is not a desirable situation. Furthermore, if there is a complete SAN failure or a problem with the VM’s LUN, the FT feature will not protect against it.

VII. So should you actually use FT? Enter SiteSurvey

Now that you’ve read all this, you might be wondering if you meet the many requirements to use FT in your own environment. VMware provides a utility called SiteSurvey that will look at your infrastructure and see if it is capable of running FT. It is available as either a Windows or Linux download, and once you install and run it, you will be prompted to connect to a vCenter Server. Once it connects, you can choose from your available clusters to generate a SiteSurvey report that shows whether or not your hosts support FT and whether the hosts and VMs meet the individual prerequisites to use the feature.

You can also click on links in the report that give you detailed information about all the prerequisites, along with compatible CPU charts. These links go to VMware’s website and display the help document for the SiteSurvey utility, which is full of great information, including the following prerequisites for FT.

- The vLockstep technology used by FT requires the physical processor extensions added to the latest processors from Intel and AMD. In order to run FT, a host must have an FT-capable processor, and both hosts running an FT VM pair must be in the same processor family.

- When ESX hosts are used together in an FT cluster, their processor speeds must be matched fairly closely to ensure that the hosts can stay in sync. VMware SiteSurvey will flag any CPU speeds that are different by more than 400 MHz.

- ESX hosts running the FT VM pair must be running at least ESX 4.0, and must be running the same build number of ESX.

- FT requires each member of the FT cluster to have a minimum of two NICs with speeds of at least 1 Gb per second. Each NIC must also be on the same network.

- FT requires each member of the FT cluster to have two virtual NICs, one for logging and one for VMotion. VMware SiteSurvey will flag ESX hosts that do not contain at least two virtual NICs.

- ESX hosts used together as an FT cluster must share storage for the protected VMs. For this reason, VMware SiteSurvey lists the shared storage it detects for each ESX host and flags hosts that do not have shared storage in common. In addition, an FT-protected VM must itself be stored on shared storage, and any disks connected to it must be on shared storage.

- At this time, FT only supports single-processor virtual machines. VMware SiteSurvey flags virtual machines that are configured with more than one processor. To use FT with those VMs, you must reconfigure them as single-CPU VMs.

- FT will not work with virtual disks backed with thin-provisioned storage or disks that do not have clustering features enabled. When you turn on FT, the conversion to the appropriate disk format is performed by default.

- Snapshots must be removed before FT can be enabled on a virtual machine. In addition, it is not possible to take snapshots of virtual machines on which FT is enabled.

- FT is not supported with virtual machines that have CD-ROM or floppy virtual devices backed by a physical or remote device. To use FT with such a virtual machine, remove the CD-ROM or floppy virtual device or reconfigure its backing to use an ISO image stored on shared storage.

- Physical RDM is not supported with FT. You may only use virtual RDMs.

- Paravirtualized guests are not supported with FT. To use FT with such a virtual machine, reconfigure it without a VMI ROM.

- N_Port ID Virtualization (NPIV) is not supported with FT. To use FT with such a virtual machine, disable its NPIV configuration.
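The per-VM prerequisites in this list are simple enough to express as data. A minimal Python sketch of a SiteSurvey-style report; it is not SiteSurvey's code, and the `VirtualMachine` record, its field names and the example VM are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class VirtualMachine:
    """Hypothetical summary of the VM settings the FT prerequisites care about."""
    name: str
    vcpus: int = 1
    disk_formats: List[str] = field(default_factory=lambda: ["thick"])
    on_shared_storage: bool = True
    snapshots: int = 0
    physical_cdrom_or_floppy: bool = False
    physical_rdm: bool = False
    vmi_paravirtualized: bool = False
    npiv_enabled: bool = False


def ft_vm_flags(vm: VirtualMachine) -> List[str]:
    """Return SiteSurvey-style warnings; an empty list means nothing was flagged."""
    flags = []
    if vm.vcpus != 1:
        flags.append(f"{vm.vcpus} vCPUs: reconfigure as a single-CPU VM")
    if not vm.on_shared_storage:
        flags.append("VM and its disks must be on shared storage")
    if "thin" in vm.disk_formats:
        flags.append("thin-provisioned disk: converted when FT is turned on")
    if vm.snapshots:
        flags.append("remove all snapshots before enabling FT")
    if vm.physical_cdrom_or_floppy:
        flags.append("remove the CD-ROM/floppy or back it with an ISO on shared storage")
    if vm.physical_rdm:
        flags.append("physical RDM: only virtual RDMs are supported")
    if vm.vmi_paravirtualized:
        flags.append("paravirtualized guest: reconfigure without a VMI ROM")
    if vm.npiv_enabled:
        flags.append("disable the VM's NPIV configuration")
    return flags


sql01 = VirtualMachine("sql01", vcpus=2, disk_formats=["thin", "thick"], snapshots=1)
for flag in ft_vm_flags(sql01):
    print(f"{sql01.name}: {flag}")
```

Thin disks are reported as a warning rather than a blocker, because the conversion is performed by default when FT is turned on.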

Below is some sample output from the SiteSurvey utility showing host and VM compatibility with FT, and which features and components are compatible or not:

Another method for checking whether your hosts meet the FT requirements is to use the vCenter Server Profile Compliance tool. To use this method, select your cluster in the left pane of the vSphere Client, then select the Profile Compliance tab in the right pane. Click the Check Compliance Now link, and it will begin checking your hosts for compliance, including FT, as shown below:

VIII. Are we there yet? Turning on Fault Tolerance


Once you meet the requirements, implementing FT is fairly simple. A prerequisite for enabling FT is that your cluster must have HA enabled. You simply select a VM in your cluster, right-click on it, select Fault Tolerance and then select “Turn On Fault Tolerance.”

A secondary VM will then be created on another host. Once it’s complete, you will see a new Fault Tolerance section on the Summary tab of the VM that displays information including FT status, secondary VM location (host), CPU and memory in use by the secondary VM, the secondary VM lag time (how far behind the primary it is, in seconds) and the bandwidth in use for FT logging.

Once you have enabled FT, there are alarms available that you can use to check for specific conditions such as FT state, latency, secondary VM status and more.

IX. Fault Tolerance tips and tricks

Some additional tips and tidbits that will help you understand and implement FT are listed below.

- Before you enable FT, be aware of one important limitation: VMware currently recommends that you do not use FT in a cluster that consists of a mix of ESX and ESXi hosts. The reason is that ESX hosts might become incompatible with ESXi hosts for FT purposes after they are patched, even when patched to the same level. This is a result of the patching process and will be resolved in a future release, so that compatible ESX and ESXi versions are able to interoperate with FT even though patch numbers do not match exactly. Until this is resolved, take this into consideration if you plan on using FT, and make sure the clusters that will have FT-enabled VMs consist of only ESX hosts or only ESXi hosts, not both.

- VMware spent a lot of time working with Intel and AMD to refine their physical processors so VMware could implement its vLockstep technology, which replicates non-deterministic transactions between the processors by reproducing the CPU instructions on the other processor. All data is synchronized, so there is no loss of data or transactions between the two systems. In the event of a hardware failure, an IP packet may be retransmitted, but there is no interruption in service or data loss, as the secondary VM can always reproduce execution of the primary VM up to its last output.

- FT does not use a specific CPU feature but requires specific CPU families to function. vLockstep is more of a software solution that relies on some of the underlying functionality of the processors. The software records the CPU instructions at the VM level and relies on the processor to do so; this has to be very accurate in terms of timing, and VMware needed Intel and AMD to modify the processors to ensure complete accuracy. The SiteSurvey utility simply looks for certain CPU models and families, not specific CPU features, to determine whether a CPU is compatible with FT. In the future, VMware may update its CPU ID utility to also report whether a CPU is FT capable.

- Currently there is a restriction that hosts must be running the same build of ESX/ESXi; this is a hard restriction and cannot be avoided. You can use FT between ESX and ESXi as long as they are the same build. Future releases may allow hosts to have different builds.

- VMotion is supported on FT-enabled VMs, but you cannot VMotion both the primary and the secondary VM at the same time. Storage VMotion is not supported on FT-enabled VMs. FT is compatible with Distributed Resource Scheduler (DRS), but DRS will not automatically move FT-enabled VMs between hosts, to ensure reliability. This may change in a future release of FT.

- In a split-brain scenario (i.e. loss of network connectivity between hosts), the secondary VM may try to become the primary, resulting in two primary VMs running at the same time. This is prevented by using a lock on a special FT file: once a failure is detected, both VMs will try to rename this file, and if the secondary succeeds, it becomes the primary and spawns a new secondary. If the secondary fails because the primary is still running and already has the file locked, the secondary VM is killed and a new secondary is spawned on another host.

- You can use FT on a vCenter Server running as a VM as long as it is running with a single vCPU.

- There is no limit to the number of FT-enabled hosts in a cluster, but FT-enabled VMs cannot span clusters. A future release may support FT-enabled VMs spanning clusters.

- There is an API for FT that provides the ability to script certain actions like disabling/enabling FT using PowerShell.

- The limit of four FT-enabled VMs is per host, not per cluster; it is not a hard limit, but staying within it is recommended for optimal performance.

- The current version of FT is designed to be used between hosts in the same data center, and is not designed to work over wide area network (WAN) links between data centers due to latency issues and failover complications between sites. Future versions may be engineered to allow for FT usage between external data centers.

- Be aware that the secondary VM can slow down the primary VM if it is not getting enough CPU resources to keep up. This shows up as a lag time of several seconds or more. To resolve this, try setting a CPU reservation on the primary VM; it will also be applied to the secondary VM and will ensure that both run at the same CPU speed. If the secondary VM slows down to the point that it severely impacts the performance of the primary VM, FT between the two will cease and a new secondary will be spawned on another host.

- When FT is enabled, any memory limits on the primary VM are removed and a memory reservation is set equal to the amount of RAM assigned to the VM. You will be unable to change memory limits, shares or reservations on the primary VM while FT is enabled.

- Patching hosts can be tricky when using the FT feature because of the requirement that the hosts must have the same build level. There are two methods you can use to accomplish this. The simplest method is to temporarily disable FT on any VMs that are using it, update all the hosts in the cluster to the same build level, and then re-enable FT on the VMs. This method requires FT to be disabled for a longer period of time. A workaround if you have four or more hosts in your cluster is to VMotion your FT-enabled VMs so they are all on half of your ESX hosts. Then update the hosts without the FT VMs so they are at the same build level. Once that is complete, disable FT on the VMs, VMotion them to the updated hosts, and re-enable FT; a new secondary will be spawned on one of the updated hosts that has the same build level. Once all the FT VMs are moved and re-enabled, update the remaining hosts so they are at the same build level, and then VMotion the VMs so they are balanced among your hosts.
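The half-and-half patching method is easy to get out of order, so it can help to generate the sequence up front. A minimal Python sketch that only produces the ordered steps; it does not call vCenter or any VMware API, and the host and VM names are hypothetical.

```python
from typing import List


def rolling_patch_plan(hosts: List[str], ft_vms: List[str]) -> List[str]:
    """Return the ordered steps for patching a cluster that runs FT VMs.

    Planning aid only: it produces text and performs no actions.
    """
    if len(hosts) < 4:
        # Simplest method: FT stays off for the whole patch window.
        return ["Disable FT on all FT-enabled VMs",
                "Patch every host to the same build",
                "Re-enable FT on the VMs"]
    half = len(hosts) // 2
    group_a, group_b = hosts[:half], hosts[half:]  # group A holds the FT VMs first
    steps = [f"VMotion {vm} (primary and secondary, one at a time) onto {', '.join(group_a)}"
             for vm in ft_vms]
    steps += [f"Patch {h}" for h in group_b]  # these hosts now run no FT VMs
    for vm in ft_vms:
        steps += [f"Disable FT on {vm}",
                  f"VMotion {vm} onto {', '.join(group_b)}",
                  f"Re-enable FT on {vm}; the new secondary lands on a patched host"]
    steps += [f"Patch {h}" for h in group_a]
    steps.append("VMotion the FT VMs to rebalance them across all hosts")
    return steps


plan = rolling_patch_plan(["esx1", "esx2", "esx3", "esx4"], ["sql01"])
for number, step in enumerate(plan, start=1):
    print(number, step)
```

The four-host minimum falls out of the design: each half needs at least two hosts so that a primary and its secondary can run on separate hosts at the same build level.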
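The split-brain tie-breaker described in the tips above is essentially an atomic rename race. A toy Python simulation, not VMware's implementation: a local temporary file stands in for the special FT file, two threads stand in for the two VMs, and `os.rename` on a single local file system supplies the atomicity. The real mechanism works on the shared datastore, which this sketch does not model.

```python
import os
import tempfile
import threading


def try_become_primary(lock_path, vm_name, results, barrier):
    """Attempt to claim the primary role by renaming the shared file.

    Only one caller can move the original file; the other finds it gone.
    """
    barrier.wait()  # start both attempts at (roughly) the same moment
    try:
        os.rename(lock_path, f"{lock_path}.{vm_name}")
        results[vm_name] = "primary"
    except FileNotFoundError:
        results[vm_name] = "killed; a new secondary will be spawned"


with tempfile.TemporaryDirectory() as tmp:
    lock_path = os.path.join(tmp, "ft-lock")  # hypothetical stand-in for the FT file
    open(lock_path, "w").close()
    results = {}
    barrier = threading.Barrier(2)
    threads = [threading.Thread(target=try_become_primary,
                                args=(lock_path, name, results, barrier))
               for name in ("vm-primary", "vm-secondary")]
    for t in threads:
        t.start()
    for t in threads:
        t.join()

print(results)
```

Which thread wins varies from run to run, but exactly one ends up as primary, which is the property that prevents two primaries from running at once.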

X. And there’s more! Additional resources

We’ve provided you with a lot of information on the new FT feature that should help you understand how it works, how to set it up, and how to use it. For even more information on FT, you can check out the following resources:

VMware White Papers:

Documentation:

Multimedia:

Utilities

VMworld sessions:

Additional Information:

VMware KB Articles:

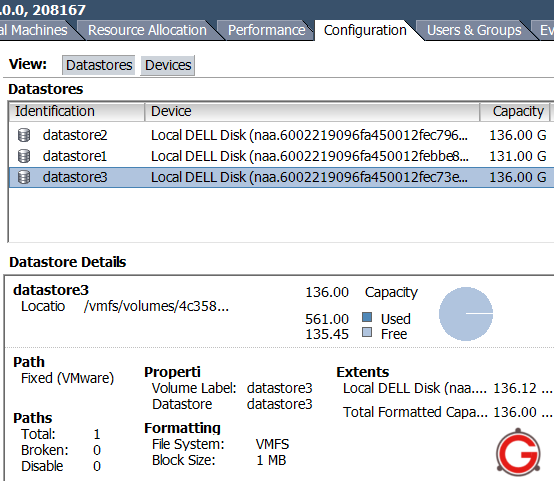

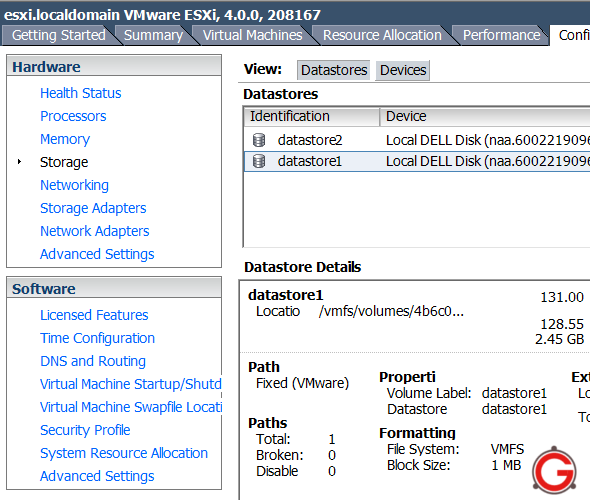

Fig: Vmware ESX Datastores

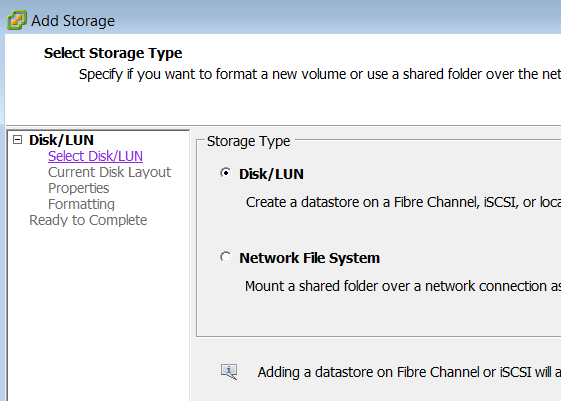

Fig: Vmware ESX Datastores Fig: Select ESXi Storage Type – Disk/LUN

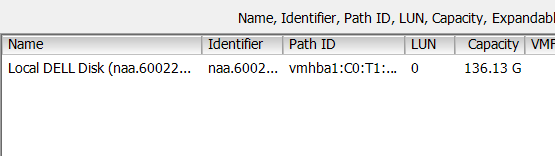

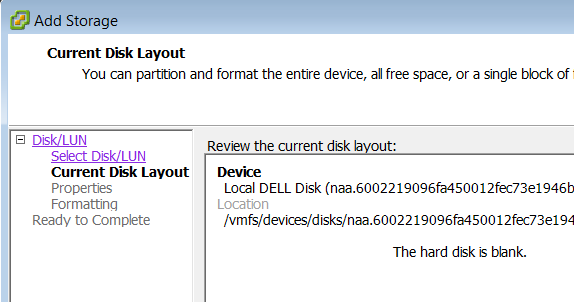

Fig: Select ESXi Storage Type – Disk/LUN Fig: vSphere VMware Select disk

Fig: vSphere VMware Select disk Fig: VMware VMFS Disk Layout Configuration

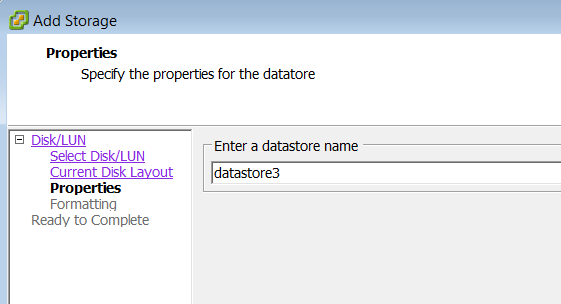

Fig: VMware VMFS Disk Layout Configuration Fig: VMFS datastore Name

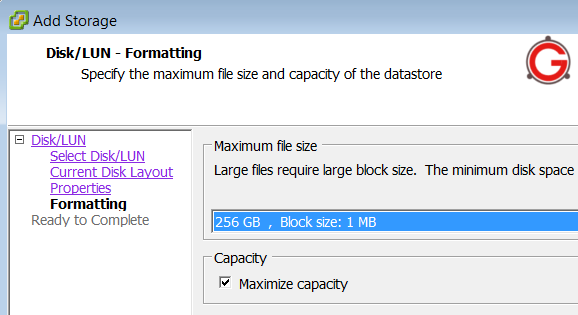

Fig: VMFS datastore Name Fig: VMware Datastore Disk Formatting

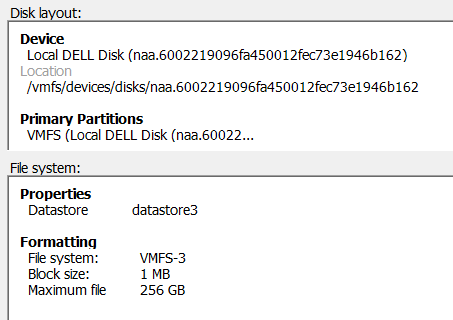

Fig: VMware Datastore Disk Formatting Fig: VMware Datastore creation confirmation

Fig: VMware Datastore creation confirmation